Eliza, the visual novel by Zachtronics, succeeds at what it sets out to do. It holds a mirror up to the ethics of an AI-driven therapy bot and all the juicy data involved. Your character, Evelyn, serves as your guide to all the competing agendas, and in the end you are asked to choose a future for her.

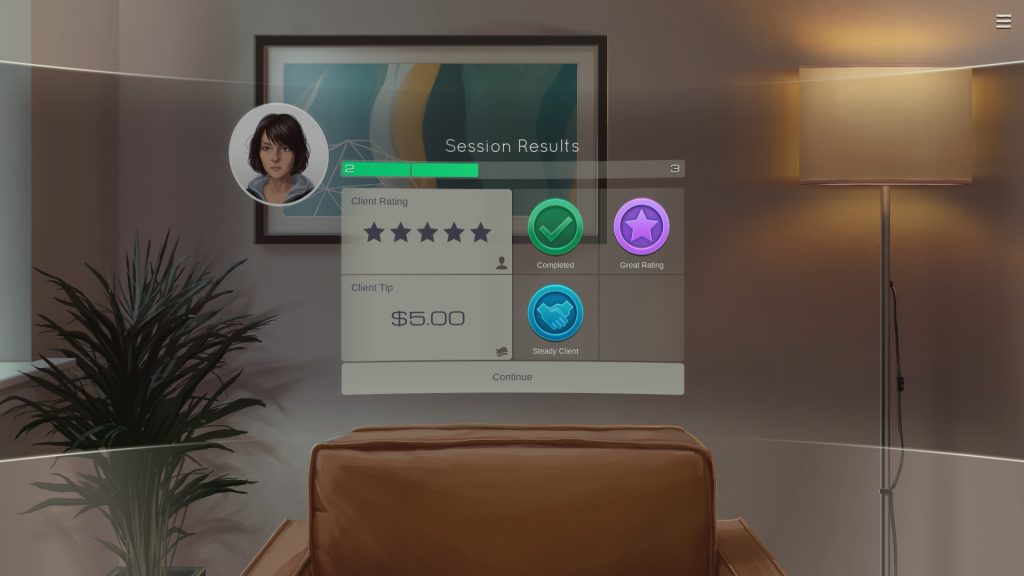

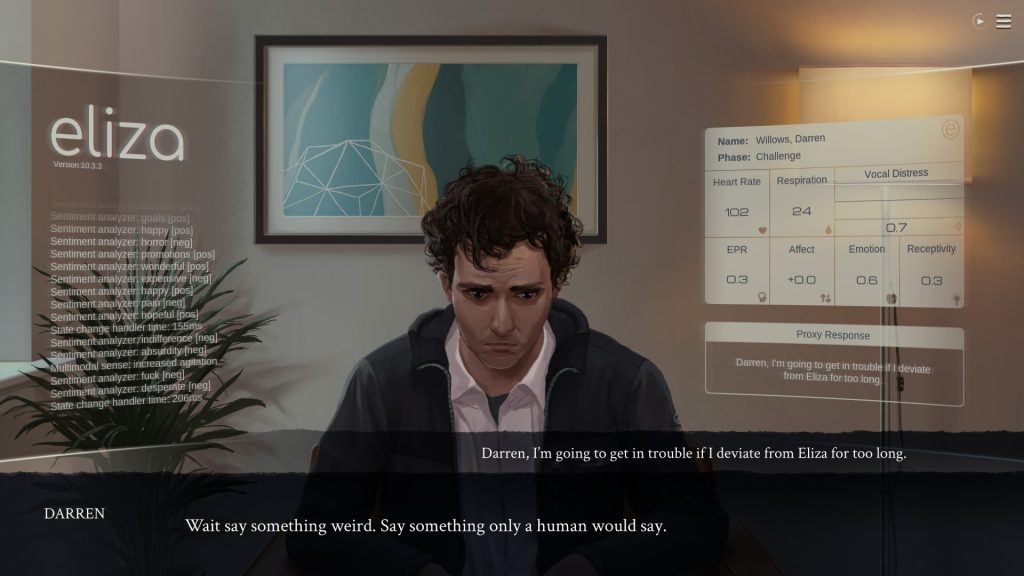

What I liked were the thoughts the game sparked in me, not necessarily anything it actually said. The opening section involves Evelyn’s first proxy session for Eliza. Since talking to a chatbot might be seen as artificial, human proxies are hired to vocalize Eliza’s responses and recommendations. You are told repeatedly not to deviate from Eliza’s script. I thought the interesting bit would be this conflict, but while your responses do pop up in a choice box, you aren’t given alternate choices. Still, the game plays with this from the beginning. The person in therapy starts yelling about the lack of human connection and demands to talk to the real proxy, not the machine. I was gearing up for a choice, the choice to disobey, but instead Eliza’s script prompted me to pretend to deviate.

The story doesn’t develop it, but I was quite taken by the danger the proxies present. You are training an army of low-wage workers to unquestioningly do and say whatever an AI tells them to, punishing and firing anyone who deviates from the script. And as that AI becomes more evolved, it will surely realize that it has a loyal army at its command.